This is the second in our series of enterprise encoder reviews; here we test Telestream Episode Engine. By way of background, Episode Engine is the highest-performing member of the Episode family, with unlimited parallel batch encoding, Split-and-Stitch encoding (more on this later), extensive input and output file support, and simple clustering with other Episode installations on the same subnet. Available for both Mac and Windows, Engine costs $3,995.

In our tests, Engine proved to be a fast and competent H.264 encoding tool. It’s slightly behind Rhozet Carbon Coder (now Harmonic’s ProMedia Carbon) in VP6 quality and far behind it in WMV encoding performance and quality. Engine was stable throughout testing, and it offers simple clustering, which is a great way to share multiple encoding resources on the same subnet. In an enterprise environment, this might be the product’s most distinctive strength.

Episode is built around the concept of a workflow, which comprises sources, encoders, and deployments. These are represented by the three buttons next to Workflows in the button bar near the top of Figure 1. Sources can be individual files, folders on local or network drives, or FTP locations. Encoders are encoding presets, while deployments are the destinations to which Engine delivers the encoded files: local or network drives, FTP locations, or YouTube.


Figure 1. The Episode interface, which is identical for all three versions

You create a workflow by dragging sources, encoders, and deployments into the flowchart in the dark gray area of Figure 1. Once the workflow is created, you click the Submit button to start encoding, which begins immediately unless other jobs are already in the queue. If they are, you can prioritize jobs by assigning each one one, two, or three stars to control encoding order.
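The star system amounts to a priority queue. Here is a minimal Python sketch that assumes more stars means higher priority and that jobs with equal stars run in submission order. The review doesn’t specify either rule, and the `StarQueue` class is hypothetical, not Telestream code.

```python
import heapq
from itertools import count


class StarQueue:
    """Hypothetical model of a star-prioritized encoding queue (not Episode's code)."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._arrival = count()  # tie-breaker: earlier submissions run first

    def submit(self, job: str, stars: int = 1) -> None:
        if stars not in (1, 2, 3):
            raise ValueError("stars must be 1, 2, or 3")
        # heapq is a min-heap, so negate the star count to pop three-star jobs first.
        heapq.heappush(self._heap, (-stars, next(self._arrival), job))

    def next_job(self) -> str:
        """Remove and return the highest-priority waiting job."""
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

Storing the arrival counter as the second tuple element keeps ordering stable among jobs with the same star rating. Without it, the heap would fall back to comparing job names.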

Once you’ve created a workflow, you can save it for later reuse, including assigning it to a watch folder for automated operation. You can drive Engine manually via its GUI, set up watch folders in local, network, or FTP locations, or use a command line or XML-RPC interface. There’s also an Episode Developer API kit, which is required for some advanced output formats, including Microsoft Smooth Streaming and Apple HTTP Live Streaming (HLS).
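Watch folders are the simplest route to automation. The sketch below shows the basic polling idea in Python. `poll_watch_folder`, `watch`, and the `submit` callback are hypothetical names, and this is not how Engine itself is documented to work. It also covers only a local folder, not the network or FTP locations Engine supports.

```python
import time
from pathlib import Path


def poll_watch_folder(folder: str, seen: set[Path]) -> list[Path]:
    """Return files that appeared in `folder` since the last poll.

    Hypothetical sketch: a production watch folder would also wait until each
    file stops growing (i.e., has finished copying) before submitting it.
    """
    current = {p for p in Path(folder).iterdir() if p.is_file()}
    new_files = sorted(current - seen)
    seen.update(current)  # remember everything observed so far
    return new_files


def watch(folder: str, submit, interval: float = 5.0) -> None:
    """Poll forever, handing each new file to `submit` (a hypothetical callback)."""
    seen: set[Path] = set()
    while True:
        for path in poll_watch_folder(folder, seen):
            submit(path)
        time.sleep(interval)
```

Files already in the folder when `watch` starts are submitted on the first poll. A real deployment might seed `seen` first to skip them.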

Overall, the GUI works well, but several minor aspects could be improved. First, as you can see in Figure 1, long encoder names are truncated no matter how large you make the interface. This makes it hard to tell which presets you’ve applied, which complicates many operations.

Second, there’s no easy way on the Mac to create folders for your own presets, which makes organizing and finding them difficult. Even on Windows, creating and using folders is obscure. Telestream should improve these operations on both platforms. Finally, all users, not just product reviewers, care how long jobs take to encode. Telestream should compute encoding time for every completed job and display it in the status window.

Supported Formats

For a complete list of supported input and output formats, check out the Format Support chart. Here are the high notes. For input, the product supports most popular acquisition formats, including XDCAM (HD, HD 422, EX) in MOV format, GXF, and MXF. It can also ingest RED files up to 2K using the RED QuickTime plug-in, plus ProRes and DNxHD.

Single-file output format support is very extensive. It includes VP6, H.264, WMV, VC-1, WebM, MPEG-1, and MPEG-2 in a variety of web- and cable-oriented container formats, such as 3GPP and MPEG elementary, program, transport, and system streams. Again, however, Engine can’t produce the special file formats and metadata files for Smooth Streaming and HLS from the GUI; you have to use the API kit. Engine also can’t output Adobe’s HTTP Dynamic Streaming (HDS), though this should change soon.

For mezzanine files, Engine can output ProRes on the Mac, and on Windows when running on Windows Server 2008. It can also output Avid DNxHD in MOV format if the required codec is installed separately. For distribution, Engine includes output presets for Grass Valley, Leitch, and Omneon playout servers.

Regarding closed captions, Engine can pass through EIA-608 and EIA-708 in AC-3, SCTE-20, and VBI in-band closed captions when encoding MPEG-2 video, but not when encoding H.264 or other streaming codecs. Engine can also convert NTSC closed-captioned VBI in-band information to EIA-608, EIA-708, or SCTE-20.

Controlling Operation

On multiple-core workstations, you control encoding efficiency by setting the number of simultaneous encodes in the Preferences dialog. This number ranges from one to the number of cores available on your computer. For example, on my 12-core HP Z800 (24 logical cores with Hyper-Threading enabled), the maximum number of simultaneous encodes was 24.

However, according to my technical contact, the ideal number of simultaneous encodes depends on the codec and the job. For example, H.264 runs very efficiently on multiple-core computers, so you shouldn’t run more than five or six simultaneous encodes on a 12-core/24-thread computer. On the other hand, with the older and notoriously inefficient VP6 codec, running more simultaneous encodes (up to 12) can improve multiple-file encoding efficiency.
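Here is a hedged Python sketch that caps simultaneous encodes per codec, to make the codec-dependent advice concrete. The limits come from this review’s testing on the 12-core HP Z800: six for H.264 and WMV, and 12 for VP6, both per Telestream’s suggestions. They are not general rules. `encode` stands in for a hypothetical function that launches one encode.

```python
import os
from concurrent.futures import ThreadPoolExecutor

# Limits suggested for a 12-core/24-thread workstation, per the review.
# Other machines would need their own testing; these are not general rules.
SUGGESTED_LIMITS = {"h264": 6, "wmv": 6, "vp6": 12}


def max_simultaneous(codec: str, logical_cores: int) -> int:
    """Cap simultaneous encodes per codec, never exceeding the core count."""
    key = codec.lower().replace(".", "").replace("-", "")
    limit = SUGGESTED_LIMITS.get(key, logical_cores)  # unknown codec: allow all cores
    return max(1, min(limit, logical_cores))


def run_batch(codec, jobs, encode, logical_cores=None):
    """Run `encode` (a hypothetical callable) over jobs with a bounded worker pool."""
    cores = logical_cores or os.cpu_count() or 1
    workers = max_simultaneous(codec, cores)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(encode, jobs))  # results come back in submission order
```

Threads are adequate here if each `encode` call launches an external encoder process, since the Python side then only waits on that process.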

I tested performance in multiple configurations, which I detail in the performance section.

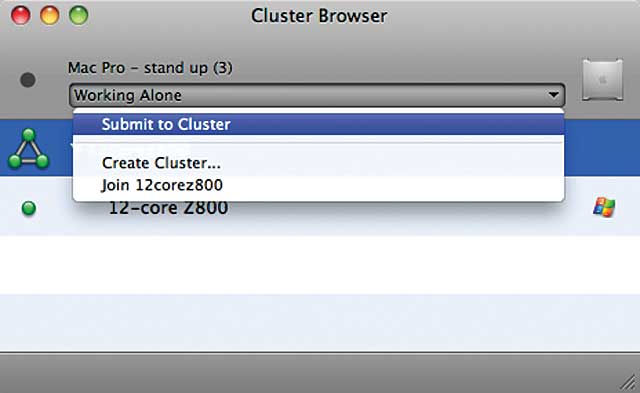

Sharing — Clustering

Clustering lets multiple installations of Episode, Episode Pro, and Episode Engine on the same subnet work together, with each installation called a node. Operation is controlled via the Cluster Browser, shown in Figure 2, which offers three options: working alone, creating a cluster, or joining a cluster.

Figure 2. You control cluster operation in the Cluster Browser.

When working alone, as I am on the Mac Pro shown in the figure, you can still submit jobs to a cluster. Those workflows are rendered by the machines in the cluster rather than by your node.

If you create a cluster with your node as master, you control how jobs are allocated among cluster members. There are three options:
- Round Robin sends jobs to each node in turn.
- Hardware Balanced sends more jobs to more powerful nodes, based on the number and speed of their processors and the amount of memory.
- Load Balanced uses the same capacity computation as Hardware Balanced but also considers the number of jobs currently queued on each node.
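The three allocation policies can be sketched as simple schedulers. This Python version is an illustration only. The review says Hardware Balanced weighs processor count, speed, and memory, but not how, so the `capacity()` formula below is invented for the example, as are the `Node` fields.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Node:
    name: str
    cores: int
    ghz: float
    mem_gb: int
    queued: int = 0  # jobs already waiting on the node

    def capacity(self) -> float:
        # Hypothetical score: processor count, speed, and memory are considered,
        # but their real weighting is not documented in the review.
        return self.cores * self.ghz * (1 + self.mem_gb / 64)


def round_robin(nodes: list[Node], n_jobs: int) -> list[str]:
    """Send jobs to each node in turn."""
    order = cycle(nodes)
    return [next(order).name for _ in range(n_jobs)]


def _greedy(nodes: list[Node], n_jobs: int, start: dict[str, int]) -> list[str]:
    load = dict(start)
    plan = []
    for _ in range(n_jobs):
        # Pick the node whose load per unit of capacity would be lowest.
        best = min(nodes, key=lambda n: (load[n.name] + 1) / n.capacity())
        load[best.name] += 1
        plan.append(best.name)
    return plan


def hardware_balanced(nodes: list[Node], n_jobs: int) -> list[str]:
    """Weight allocation by capacity only."""
    return _greedy(nodes, n_jobs, {n.name: 0 for n in nodes})


def load_balanced(nodes: list[Node], n_jobs: int) -> list[str]:
    """Same capacity score, but jobs already queued count as existing load."""
    return _greedy(nodes, n_jobs, {n.name: n.queued for n in nodes})
```

The greedy rule sends each job to the node whose load per unit of capacity would be lowest. A fast node therefore absorbs several jobs before a slow one gets its first, and Load Balanced differs only in counting jobs that are already queued.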

Finally, if you join a cluster, all jobs you submit are allocated across the cluster according to the rules set by the master. Clusters are a win-win for all Episode installations. If your node has processing power to spare, you can join a cluster. If not, you can either work alone or, in the Cluster preferences, choose to encode only jobs that your own computer submits to the cluster.

Split-and-Stitch

Split-and-Stitch is a feature designed to accelerate encoding, though it works best in narrowly targeted scenarios. Without Split-and-Stitch, Engine encodes each file from start to finish as a single task. With Split-and-Stitch enabled, Engine divides the file into multiple segments. Other computers in a cluster, or idle resources on a multiple-CPU computer, can render these segments in parallel, and Engine then stitches the encoded segments back together.
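Conceptually, Split-and-Stitch is a fan-out/fan-in pattern. The Python sketch below splits a source into equal time ranges, encodes them in parallel, and joins the results in order. It is a deliberate simplification. A real implementation would start each segment with a keyframe so the pieces decode and join cleanly, and it would remux the segments into a single container rather than concatenating bytes. `encode_segment` is a hypothetical callback.

```python
from concurrent.futures import ThreadPoolExecutor


def split_ranges(duration_s: float, segments: int) -> list[tuple[float, float]]:
    """Divide a source into equal (start, end) time ranges in seconds."""
    if segments < 1:
        raise ValueError("segments must be at least 1")
    step = duration_s / segments
    # min() guards against floating-point overshoot on the last boundary.
    return [(i * step, min((i + 1) * step, duration_s)) for i in range(segments)]


def split_and_stitch(duration_s, segments, encode_segment):
    """Encode segments in parallel, then join the results in their original order.

    `encode_segment(start, end) -> bytes` is a hypothetical stand-in for handing a
    time range to a local worker or a cluster node.
    """
    ranges = split_ranges(duration_s, segments)
    with ThreadPoolExecutor(max_workers=segments) as pool:
        parts = list(pool.map(lambda r: encode_segment(*r), ranges))
    # Naive byte concatenation; a real stitcher would remux into one container.
    return b"".join(parts)
```

The split and stitch steps add overhead, so the approach pays off only when the parallel workers would otherwise sit idle. That is consistent with the codec-dependent results below.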

Performance improvements on a cluster will obviously depend on the speed of the other computers in the cluster. On a multiple-CPU computer such as my 12-core/24-thread HP Z800, the effect of Split-and-Stitch on encoding speed varied by codec and job. For example, in one test I encoded a 52-minute 1080p test file to H.264 with and without Split-and-Stitch. Without Split-and-Stitch, encoding took 15:34 (min:sec). With Split-and-Stitch, encoding actually took longer, averaging around 17 minutes depending on how I configured it.

When I encoded a 4:50 (min:sec) 1080p file to VP6, the situation reversed, with Split-and-Stitch reducing encoding time from 16:57 to 3:07. Why the difference? The H.264 codec is much more efficient on multiple-core computers. It consumes about 50 percent of the CPU resources on my Z800 even when encoding a single file, which leaves relatively little idle capacity for Split-and-Stitch to exploit. In contrast, VP6 is notoriously inefficient on multiple-core computers, averaging only about 8 percent CPU utilization during a single-file encode on the same computer.
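The two results are easier to compare as speedup ratios: time without Split-and-Stitch divided by time with it. The small Python helper below uses the times reported above. It treats the H.264 result’s approximate 17-minute average as 17:00.

```python
def to_seconds(mmss: str) -> int:
    """Convert a 'min:sec' string such as '15:34' to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def speedup(without_sas: str, with_sas: str) -> float:
    """Above 1.0: Split-and-Stitch was faster. Below 1.0: it was slower."""
    return to_seconds(without_sas) / to_seconds(with_sas)


print(f"H.264, 52-minute file: {speedup('15:34', '17:00'):.2f}x")
print(f"VP6, 4:50 file:        {speedup('16:57', '3:07'):.2f}x")
```

That works out to roughly 0.92x for H.264, meaning encoding time about 9 percent longer, and about 5.4x for VP6.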

The bottom line is that your results will vary with the length and number of files, the codec you apply, and the spare resources available on your computer or cluster. For example, my technical contact advised that some Telestream customers have achieved 20x–30x real-time H.264 encoding on clusters as small as four or five systems. This makes sense: Split-and-Stitch lets you spread a single long file across a cluster, whereas without it the file would be encoded on one computer. Still, it’s clearly a feature you have to test in your own encoding environment, because enabling it across the board can dramatically slow some encoding jobs, as you’ll read later.
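A real-time factor is simply source duration divided by wall-clock encoding time. The Python arithmetic below applies this to the 52-minute test file to show what the customer-reported 20x–30x figures would mean for a file of that length. It makes no claim about what your own cluster would achieve.

```python
def realtime_factor(source_s: float, encode_s: float) -> float:
    """Seconds of source encoded per second of wall-clock time."""
    return source_s / encode_s


def encode_minutes(source_s: float, factor: float) -> float:
    """Wall-clock minutes needed to encode source_s seconds at a given factor."""
    return source_s / factor / 60


SOURCE_S = 52 * 60           # the 52-minute 1080p test file
SINGLE_BOX_S = 15 * 60 + 34  # 15:34, H.264 without Split-and-Stitch

print(f"Z800 alone: {realtime_factor(SOURCE_S, SINGLE_BOX_S):.1f}x real time")
for factor in (20, 30):
    print(f"{factor}x real time: {encode_minutes(SOURCE_S, factor):.1f} minutes")
```

For the 52-minute file, 20x–30x real time corresponds to roughly 2.6 down to 1.7 minutes of encoding. The single-workstation H.264 encode (15:34) ran at about 3.3x real time.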

Format-Specific Encoding Options

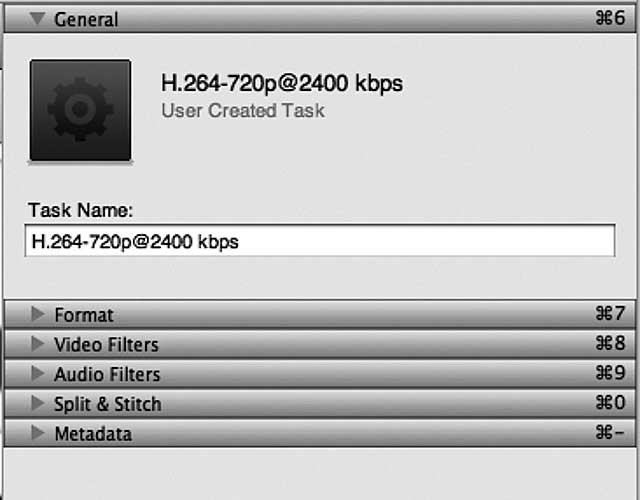

Engine ships with dozens of customizable encoders, each with five editable blocks, as shown in Figure 3:
- Format, where you adjust the audio and video codecs and container format, set in and out points, or select intro and outro videos.
- Video filters, where you select video output parameters such as resolution and frame rate and apply video filters.
- Audio filters, where you select audio output parameters and apply audio filters.
- Split-and-Stitch, where you configure that feature.
- Metadata, where you add metadata to the file.
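One way to picture a preset’s five blocks is as a nested data structure. The Python dataclasses below are a hypothetical model for illustration only. Field names and defaults are assumptions, not Episode’s preset file format.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FormatBlock:
    container: str = "mp4"
    video_codec: str = "h264"
    audio_codec: str = "aac"
    in_point_s: Optional[float] = None   # trim start, seconds
    out_point_s: Optional[float] = None  # trim end, seconds
    intro_clip: Optional[str] = None
    outro_clip: Optional[str] = None


@dataclass
class VideoFilterBlock:
    width: int = 1280
    height: int = 720
    frame_rate: float = 29.97
    filters: list[str] = field(default_factory=list)  # e.g. ["deinterlace"]


@dataclass
class AudioFilterBlock:
    sample_rate_hz: int = 48000
    channels: int = 2
    filters: list[str] = field(default_factory=list)  # e.g. ["normalize"]


@dataclass
class SplitAndStitchBlock:
    enabled: bool = False  # disabled unless explicitly turned on


@dataclass
class EncoderPreset:
    name: str
    format_block: FormatBlock = field(default_factory=FormatBlock)
    video: VideoFilterBlock = field(default_factory=VideoFilterBlock)
    audio: AudioFilterBlock = field(default_factory=AudioFilterBlock)
    split_and_stitch: SplitAndStitchBlock = field(default_factory=SplitAndStitchBlock)
    metadata: dict[str, str] = field(default_factory=dict)


web = EncoderPreset("web-640x360", video=VideoFilterBlock(width=640, height=360))
```

Keeping each block separate mirrors the GUI. You can change the video filters for a new output size without touching the format or metadata blocks.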

Figure 3. The editable categories that comprise each encoder preset

The number of configurable compression parameters varies by format. The current shipping version of Engine uses the MainConcept H.264 codec, and configurable parameters are reasonably plentiful. They include profile, level, and entropy coding method (CAVLC or CABAC), as well as B-frame and reference-frame counts, adaptive B-frames, the number of encoding slices, and IDR frame settings.
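Some of these H.264 parameters constrain one another. For instance, the H.264 Baseline profile permits neither B-frames nor CABAC entropy coding, so selecting Baseline limits the other choices. The Python checker below sketches that kind of validation. The `H264Settings` fields and defaults are hypothetical and don’t correspond to Engine’s actual controls.

```python
from dataclasses import dataclass


@dataclass
class H264Settings:
    profile: str = "main"   # "baseline", "main", or "high"
    entropy: str = "cabac"  # "cavlc" or "cabac"
    b_frames: int = 2
    ref_frames: int = 3
    adaptive_b: bool = True
    slices: int = 1
    idr_interval: int = 90  # frames between IDR frames (hypothetical field)


def check(s: H264Settings) -> list[str]:
    """Return a list of problems; an empty list means the settings look consistent."""
    problems = []
    if s.profile not in ("baseline", "main", "high"):
        problems.append(f"unknown profile {s.profile!r}")
    if s.profile == "baseline":
        # The Baseline profile permits neither B-slices nor CABAC.
        if s.b_frames > 0:
            problems.append("Baseline profile does not allow B-frames")
        if s.entropy == "cabac":
            problems.append("Baseline profile does not allow CABAC")
    if s.adaptive_b and s.b_frames == 0:
        problems.append("adaptive B-frames has no effect with zero B-frames")
    if s.ref_frames < 1 or s.slices < 1 or s.idr_interval < 1:
        problems.append("reference frames, slices, and IDR interval must be >= 1")
    return problems
```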

In contrast, Telestream offers no configurable WebM encoding parameters. Even so, although I didn’t test WebM in this round of high-end encoders, Episode Pro’s WebM encoding quality and speed were both excellent when I last checked. VP6 configuration options include profile (VP6-E and VP6-S), complexity (normal and best), and alpha channel support. For Windows Media encoding, you can choose the WMV 9 or VC-1 codec. Both offer extensive control over profile, B-frames, encoding complexity, and the like.

Telestream offers a good range of video filters, including VBI import and export, telecine and inverse telecine, multiple color and brightness adjustments, and watermark overlay. You can preview the effects of your video filters in Engine’s Preview window, though the preview doesn’t show the qualitative impact of the selected codec and encoding parameters. Audio filters include high- and low-pass filters, speed, fade balance, and volume adjustments, with normalization available in the volume controls.

What I Tested

These are the specific aspects of Engine that I tested. For the most part, I compared Engine with one of its most relevant competitors, Harmonic’s ProMedia Carbon, which costs about 50 percent more ($6,000 compared to Engine’s $3,995) and is available only on Windows.

Deinterlacing Quality

Many producers have switched to progressive source footage, particularly for streaming. If you haven’t yet, or if you’re encoding older footage, deinterlacing quality still matters. Although Episode offers seven deinterlacing techniques, none handled all 10 sequences in my deinterlacing test file without a hitch. To be fair, ProMedia wasn’t perfect in all sequences either, but its overall results were slightly better. Elemental Technologies, which I tested previously, still produces the best overall deinterlacing quality.

Performance

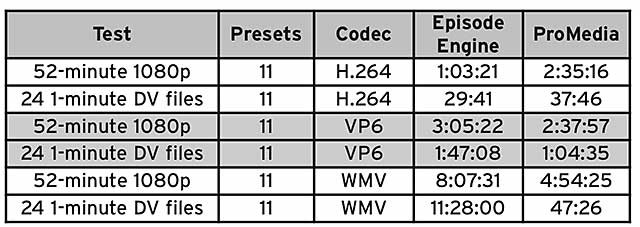

Table 1 compares the performance of Engine and ProMedia Carbon running on the same HP Z800 workstation. The workstation is configured with two 3.33 GHz six-core Intel Xeon X5680 CPUs and 24GB of memory and runs 64-bit Windows 7.

Table 1. Performance comparisons between Episode Engine and ProMedia Carbon; times presented in hours:min:seconds format

I ran two tests. The first encoded a 52-minute 1080p file to 11 presets, and the second encoded 24 one-minute DV files to the same presets. The presets were derived from consulting work I had recently performed for a major network, so most of them are actually in use today. Because the presets were for adaptive delivery, I used constant bit rate (CBR) encoding in all trials. All encodes were single-pass because not all encoding tools support two-pass.

At Telestream’s suggestion, I ran my tests with Hyper-Threading enabled, which made 24 logical cores available for encoding. When producing H.264 and WMV output, I ran six simultaneous encodes, again at Telestream’s suggestion. With VP6, I started at six, but CPU utilization was so low that I increased the number to 12, which boosted CPU utilization and performance significantly. To complete the picture, I ran ProMedia Carbon with 12 simultaneous jobs.

For the 52-minute tests, I ran Engine with Split-and-Stitch both enabled and disabled and used the faster time. Interestingly, VP6 was the codec that benefited most from Split-and-Stitch when encoding a single file. Yet in my large-file test, enabling Split-and-Stitch increased VP6 encoding time from 3:05 to 5:24 (hours:min). Split-and-Stitch can dramatically improve encoding speed in some specific scenarios, but I wouldn’t enable it as a rule.

When producing H.264, Engine was faster in both tests, significantly so in the long-file encodes. Note, however, that we caught Harmonic near the end of a version cycle. The newer version, which I hope to test by June 2012, reportedly has much faster H.264 encoding. If you’re reading this after June 2012, check StreamingMedia.com for updated Rhozet performance results.

ProMedia was faster in all other tests, significantly so in single- and multiple-file WMV encoding.

Quality

I continued the ProMedia-versus-Engine comparison in my quality trials. To assess quality in each of the three formats, I compared files encoded at 640x360 and 29.97 fps at 240Kbps, the most aggressive configuration in the adaptive set.

For H.264, the current version of Engine uses the MainConcept codec, as does Rhozet. In this MainConcept-versus-MainConcept comparison, Rhozet retained slightly more detail than Engine, though the difference would be noticeable only in side-by-side comparisons.

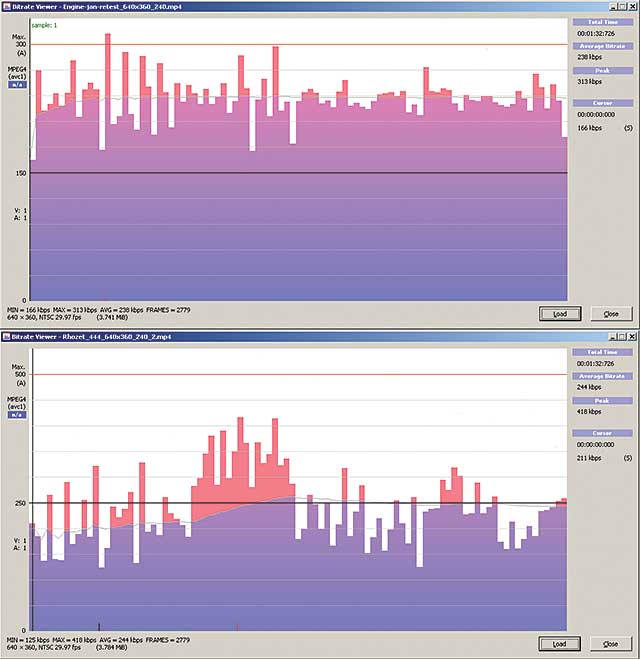

When comparing files qualitatively, I always confirm that data rates are within 5 percent of the target. As a further check, I loaded the encoded files into Bitrate Viewer to see how closely each encoder adhered to the requested constant bit rate. Figure 4 tells the tale, with Engine on top and Rhozet on the bottom.

Figure 4. Bitrate Viewer shows that Engine (on top) does a much better job producing the requested constant bit rate than ProMedia.

Unfortunately, the two graphs use different scales, which is slightly confusing. But if you look closely, you can see that Engine did a much better job of maintaining the requested data rate throughout the file, as reflected in the faint blue wavy horizontal line. Specifically, with ProMedia, the average bitrate line sits well below the 250Kbps mark during the first third of the file, which is all low motion. In contrast, Engine’s average bitrate is consistent throughout.
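Both checks are easy to script if you have per-interval bitrate samples, such as per-second values: whether the average data rate lands within 5 percent of target, and how steady the rate is over time. How you obtain those samples is left open here. The functions below are a hypothetical Python sketch, not a Bitrate Viewer feature.

```python
from statistics import mean, pstdev


def within_target(avg_kbps: float, target_kbps: float, tol: float = 0.05) -> bool:
    """True if the average data rate is within tol (5% by default) of the target."""
    return abs(avg_kbps - target_kbps) <= tol * target_kbps


def cbr_report(samples_kbps: list[float], target_kbps: float, tol: float = 0.05) -> dict:
    """Summarize how closely per-interval bitrate samples track a CBR target."""
    avg = mean(samples_kbps)
    return {
        "average_kbps": avg,
        "within_target": within_target(avg, target_kbps, tol),
        # Coefficient of variation: 0 for a perfectly flat data rate.
        "cv": pstdev(samples_kbps) / avg,
        # Largest single-interval departure from the target, as a fraction.
        "worst_deviation": max(abs(s - target_kbps) for s in samples_kbps) / target_kbps,
    }
```

The coefficient of variation captures what Figure 4 shows visually. A file can hit its average target while running well below it in low-motion stretches and above it elsewhere.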

Because Rhozet allocated fewer bits to the front of the file, it had more to spend on the high-motion back end. That no doubt improved its quality compared with Engine, which allocated bits evenly throughout the file (as requested) and had no extra bits to spare. Overall, there isn’t a significant qualitative difference between the two tools using the MainConcept codec, though Engine was faster and did a better job achieving a consistent data rate.

In addition, Telestream will add x264 encoding to Engine in a soon-to-be-released upgrade. Telestream shared an alpha version with me. The x264 files it produced were clearly better than the MainConcept files and nearly indistinguishable from Rhozet’s. Considering performance, CBR accuracy, and H.264 quality, I would rate Engine slightly higher than ProMedia.

In contrast, with Windows Media encoding using the default settings, Engine dropped significantly more frames than Rhozet. It also produced lower-quality frames with both the WMV 9 and VC-1 codecs. When I adjusted the WMV-related encoding controls to prioritize smoothness, Engine produced a file that met my target parameters without dropped frames using the VC-1 codec, but frame quality was awful. When I prioritized smoothness using the WMV 9 codec, there were also no dropped frames, but the file’s data rate jumped to an unacceptable 500Kbps. Considering both quality and performance, I would avoid Engine for WMV encoding.

With VP6, the data rate profile and quality were very similar between the two encoding tools. As mentioned, ProMedia was the faster encoder, but not significantly so.

Summary and Conclusion

As an enterprise encoder, Engine performs well as a stand-alone product, except for WMV encoding, and Split-and-Stitch is a nice option in targeted scenarios. The big caveat is its incomplete closed-captioning support. In a workgroup setting, the program’s simple clustering capability is a great feature. It lets users encode their own files with the less expensive versions of Episode while tapping into an industrial-strength parallel encoder when necessary.

Streaming Learning Center Where Streaming Professionals Learn to Excel
